Models Are Essential for Banks, but How Do We Know They’re Right?

Banks depend upon models of all kinds, because reality is far too complex to understand well enough to predict the future perfectly. A model is a simplification of reality.

The problem is that simplification introduces uncertainty, which means risk. Regulators are very concerned about model risk. They require that banks manage it carefully and transparently.

What is a Model?

In my college economics classes, I was incredibly irritated by the concept of “perfect competition”. This posits lots of suppliers, lots of buyers, and perfect information about supply, demand and prices. This seemed like a ridiculous oversimplification of the complexities of trade. Yet it allowed a basic understanding of how prices tend to rise with increased demand, and fall with increased supply. This was a truth that on its own wasn’t terribly useful. But it helped explain an important principle among those that drive marketplace economics.

Perfect competition in fact describes assumptions that are input to a model. Add some data about historical supply, demand, and prevailing prices, and it is possible to create a model. This is a calculation process that will predict what will happen to prices if supply and demand change over time.
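As a toy illustration of such a calculation process, the sketch below fits a straight line relating price to excess demand (demand minus supply) by ordinary least squares. The figures are invented for illustration, and the linear form is itself a simplifying assumption rather than anything economic theory guarantees.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical history: units demanded, units supplied, prevailing price.
demand = [80, 90, 100, 110, 120]
supply = [100, 100, 100, 100, 100]
price = [8.0, 9.0, 10.0, 11.0, 12.0]

excess_demand = [d - s for d, s in zip(demand, supply)]
slope, intercept = fit_line(excess_demand, price)


def predict_price(new_demand: float, new_supply: float) -> float:
    """Predicted price under the fitted (assumed linear) relationship."""
    return intercept + slope * (new_demand - new_supply)
```

With this made-up history the fitted slope is positive, so `predict_price` rises when demand grows and falls when supply grows, which is all the perfect-competition assumptions promise.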

All Models Are Wrong …

The brilliant British statistician George Box said (in 1976 and several times after that, though he wasn’t the first):

“Essentially, all models are wrong, but some are useful.”

What Box states is a truism. Since a model is a simplification of reality, it essentially has to be wrong. The past is not a perfect predictor of the future. Assumptions used to simplify a model eliminate various aspects of reality’s complexity. And so unexpected results must be expected.

Unexpected results are the primary target of risk management. What is needed of a model is a “reasonable” probability that actual results will not deviate from predicted results by more than an “acceptable” margin. An organization must define “reasonable” and “acceptable” for itself, in its particular business context. These definitions make up the risk appetite of the model owner. They determine how far assumptions can be relied upon, and how conservative any rules that drive action from the results must be.
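One way to make this concrete is to measure how often predictions land within the acceptable margin and compare that share with the reasonable probability the organization has chosen. The sketch below assumes both thresholds are simple numbers; the function names and sample values are hypothetical.

```python
from typing import Sequence


def share_within_margin(
    predicted: Sequence[float], actual: Sequence[float], margin: float
) -> float:
    """Fraction of observations whose absolute error is at most `margin`."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(1 for p, a in zip(predicted, actual) if abs(p - a) <= margin)
    return hits / len(predicted)


def within_risk_appetite(
    predicted: Sequence[float],
    actual: Sequence[float],
    margin: float,      # the "acceptable" deviation
    min_share: float,   # the "reasonable" probability
) -> bool:
    """True if enough predictions fell inside the acceptable margin."""
    return share_within_margin(predicted, actual, margin) >= min_share


# Illustrative numbers only.
predicted = [100.0, 102.0, 98.0, 105.0]
actual = [101.0, 110.0, 97.5, 104.0]
print(share_within_margin(predicted, actual, margin=2.0))  # 0.75
```

Here three of the four predictions fall within a margin of 2.0, so the model would pass a 70% requirement and fail a 90% one.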

Regulatory Expectations

The rather more comprehensive OCC definition of a model is:

“…a quantitative method, system, or approach that applies statistical, economic, financial, or mathematical theories, techniques, and assumptions to process input data into quantitative estimates. A model consists of three components: an information input component, which delivers assumptions and data to the model; a processing component, which transforms inputs into estimates; and a reporting component, which translates the estimates into useful business information.”

In Supervisory Letter SR 11-7, issued by the Federal Reserve in April 2011 and adopted by the OCC as Bulletin 2011-12, banks are required to manage model risk. The following major elements are considered (rightly) to be necessary:

- Maintenance of an enterprise model inventory

- Documentation of each model in an accessible repository, covering:

  - Assumptions

  - Formulae

  - Data sources and uses

  - Business use

- Independent validation

- Clear policies for model development

They are mostly self-explanatory, except that it is often not clear how to perform independent validations of models. SR 11-7 makes very clear that this is absolutely required for any model on which a bank’s results depend. It covers all categories of risk. This includes market risk, credit risk and (perhaps hardest of all) operational risk.

The OCC guidance includes a guiding principle of “effective challenge” of models. This is defined as “critical analysis by objective, informed parties who can identify model limitations and assumptions and produce appropriate changes”.

Independent Model Validation

Independent validation doesn’t necessarily require that a third party be engaged to validate models. What it does require is that a model should be validated by parties that have no stake in the success or failure of the model. They should also have had no hand in its construction.

If an independent party competent to validate a model can be found inside the bank, then using that party will usually be less expensive. Even so, the validation process should be reviewed by internal audit and external regulators. For more complex models, it can be difficult to find the right level of technical expertise outside the group that created the model.
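The independence requirement lends itself to a mechanical check: nobody who validates a model should also appear among its builders or among those with a stake in its outcome. The record below is a hypothetical inventory entry, not a format prescribed by SR 11-7, and the field names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ModelRecord:
    """Hypothetical inventory entry; field names are illustrative only."""
    name: str
    builders: Set[str] = field(default_factory=set)
    business_owners: Set[str] = field(default_factory=set)
    validators: Set[str] = field(default_factory=set)


def conflicted_validators(record: ModelRecord) -> Set[str]:
    """Validators who built the model or have a stake in its results."""
    return record.validators & (record.builders | record.business_owners)


def is_independently_validated(record: ModelRecord) -> bool:
    """At least one validator, and none of them conflicted."""
    return bool(record.validators) and not conflicted_validators(record)


# Made-up people and model name.
record = ModelRecord(
    name="aml-monitoring-rules",
    builders={"alice", "bob"},
    business_owners={"carol"},
    validators={"dave", "bob"},
)
print(conflicted_validators(record))  # {'bob'}
```

A check like this only covers who signed off. Whether the validators are competent and genuinely objective still needs human judgment.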

In common Enterprise Risk Management terms, there is the need for three lines of defense.

First line of defense: model builders carrying out their own testing.

Second line of defense: independent validation of the model.

Third line of defense: audit or regulatory validation of the overall model development, testing and validation process.

Model validation looks at the quality of the following model elements:

- Theory behind the model, and documentation of its applicability. This is particularly important for third party vendor models.

- Model documentation.

- How the model is implemented in production, including protection from unauthorized change.

- Data inputs, including historical time series and variables.

- Assumptions regarding anticipated future influencers of results.

- Outputs actually created by the model.

SR 11-7 identifies three essential elements of an effective validation framework:

- Evaluation of conceptual soundness, including development evidence. At the time of a model’s development, before it is deployed, it needs to be tested. Its effectiveness must be demonstrated through prototyping, benchmarking and/or piloting.

- Ongoing monitoring, including process verification and benchmarking. While the model is in use, the reality being modelled may change, or assumptions may need to be refined. There needs to be something against which to compare results. This will ideally be a benchmark, or at least a qualitative review of trends.

- Outcomes analysis, including back-testing. Predicted outcomes need to be verified against actual results. This will determine whether the model is operating within acceptable error ranges. Even better is back-testing, in which we ask what we would have predicted “if we had known then what we know now”.

Benchmarking

Two of the recommended approaches are particularly effective when an alternative model can be used as a benchmark. If the bank’s model performs consistently with, or better than, a well-proven alternative model, then confidence in it can be greatly increased.

- For ongoing monitoring, benchmarking involves running the bank’s production model in parallel with a regulator-approved reference model. Depending on the type of model in use, results from the bank’s custom model should either come close to matching those of the reference model, or match or improve on them.

- In back-testing, the actual results are compared with the model’s predictions. One challenge is determining the cause of deviations. Were they the result of unpredictable or random events, or of flaws in the model? One approach would be to run the historical data through the reference model. This would show that the bank’s custom model is working as planned (or not).
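A benchmark back-test of this kind can be sketched by scoring both models against the same actual results. The example below uses mean absolute error as the score, which is an assumption: the right measure depends on the type of model. All figures are invented.

```python
from typing import Dict, Sequence


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute gap between predictions and actual results."""
    if len(predicted) != len(actual) or len(actual) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def benchmark(
    custom_pred: Sequence[float],
    reference_pred: Sequence[float],
    actual: Sequence[float],
    tolerance: float = 0.0,  # how much worse than the reference is still acceptable
) -> Dict[str, object]:
    """Score the custom and reference models on the same historical data."""
    custom_mae = mean_absolute_error(custom_pred, actual)
    reference_mae = mean_absolute_error(reference_pred, actual)
    return {
        "custom_mae": custom_mae,
        "reference_mae": reference_mae,
        "custom_at_least_as_good": custom_mae <= reference_mae + tolerance,
    }


# Illustrative history only.
actual = [10.0, 12.0, 11.0, 13.0]
custom_pred = [10.5, 11.5, 11.0, 13.5]
reference_pred = [11.0, 11.0, 12.0, 12.0]
result = benchmark(custom_pred, reference_pred, actual)
print(result["custom_mae"], result["reference_mae"])  # 0.375 1.0
```

If both models miss badly in the same periods, the deviation is more likely to come from unpredictable events than from a flaw specific to the custom model.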

Example from AML Monitoring

This can be illustrated using a hypothetical AML monitoring reference model. Most current production bank models are rules-based. They encapsulate “expert” understanding of transaction characteristics that indicate a suspicious transaction. For example, an expert would become suspicious if more than one cash deposit of close to $10,000 occurred in a day across different channels. This would indicate a possible intent to stay just under the threshold of the large currency transaction reporting rule.
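A minimal sketch of such an expert rule follows. The band that counts as “close to $10,000”, the grouping by customer and day, and all class and function names are assumptions made for illustration; a production rule would be tuned and documented by the bank.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, List, Set, Tuple

REPORTING_THRESHOLD = 10_000.00   # cash transactions above this are reported
NEAR_THRESHOLD_FLOOR = 9_000.00   # assumed lower bound for "close to $10,000"


@dataclass(frozen=True)
class CashDeposit:
    customer_id: str
    day: date
    channel: str   # e.g. "branch", "atm"
    amount: float


def flag_possible_structuring(deposits: Iterable[CashDeposit]) -> Set[Tuple[str, date]]:
    """Flag (customer, day) pairs with more than one near-threshold cash
    deposit made through at least two different channels."""
    near: Dict[Tuple[str, date], List[CashDeposit]] = defaultdict(list)
    for d in deposits:
        if NEAR_THRESHOLD_FLOOR <= d.amount <= REPORTING_THRESHOLD:
            near[(d.customer_id, d.day)].append(d)
    return {
        key
        for key, items in near.items()
        if len(items) > 1 and len({i.channel for i in items}) > 1
    }


# Made-up transactions.
day = date(2024, 1, 5)
deposits = [
    CashDeposit("C1", day, "branch", 9_500.0),
    CashDeposit("C1", day, "atm", 9_800.0),     # second channel: flagged
    CashDeposit("C2", day, "branch", 9_500.0),
    CashDeposit("C2", day, "branch", 9_700.0),  # same channel: not flagged by this rule
    CashDeposit("C3", day, "atm", 2_000.0),
]
print(flag_possible_structuring(deposits))
```

Widening the band or dropping the channel condition would catch more cases at the cost of more alerts, which is the tuning trade-off that rules-based systems face.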

A reference model could be built using different technology, such as big data analytics based on industry-wide data. In a bank production environment, machine learning could also improve results over time, based upon actual bank experience. So the reference model would represent a baseline or benchmark that would provide the minimum performance level that a bank should achieve with its own model.

There are two primary issues with AML Monitoring operations. The first, and most obvious, one is the possibility of allowing a suspicious transaction to pass through without being reported. Banks are required to file Suspicious Activity Reports whenever they have reasonable doubt about the legitimacy of a transaction from a money laundering or terrorist financing perspective. If they fail to report transactions that turn out to be related to these illegal activities, they are subject to regulatory sanctions. If regulators find a pattern of missed transactions, banks can be fined massive amounts.

It is therefore critically important to miss nothing. So rules-based systems are typically tuned very conservatively. This results in large volumes of “false positives”. In a false positive, a perfectly legitimate transaction is flagged as suspicious. In some banks, 95% or more of transactions flagged as suspicious are in fact legitimate. But each of them needs to be manually reviewed. This in itself is risky, because of what the health care industry (amongst others) calls “alarm fatigue”. An operator who expects a flagged transaction to be almost certainly legitimate is more likely to miss a real suspect.

When an AML monitoring model is benchmarked, the bank’s custom model should exhibit two characteristics:

- Equal or fewer missed suspicious transactions than the reference model. This applies when the models run in parallel during ongoing monitoring. It also applies in back-testing. This would involve running historical data through the reference model. This is to ensure it wouldn’t have caught any suspects that the custom model missed.

- Equal or fewer false positives than the reference model. Again, this applies either during ongoing monitoring or in back-testing.
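Both characteristics can be checked by comparing each model’s alerts with the set of transactions later confirmed as suspicious. The sketch below assumes that such a confirmed set is available, which in practice is only partly true, since suspicious transactions that no model flags may never be discovered. The names and transaction IDs are hypothetical.

```python
from typing import Dict, Set


def alert_stats(flagged: Set[str], confirmed_suspicious: Set[str]) -> Dict[str, float]:
    """Missed suspects and false positives for one model's alerts."""
    missed = confirmed_suspicious - flagged
    false_positives = flagged - confirmed_suspicious
    share = len(false_positives) / len(flagged) if flagged else 0.0
    return {
        "missed": len(missed),
        "false_positives": len(false_positives),
        "false_positive_share": share,  # portion of alerts that were legitimate
    }


def meets_benchmark(
    custom_flagged: Set[str],
    reference_flagged: Set[str],
    confirmed_suspicious: Set[str],
) -> bool:
    """True if the custom model has no more misses and no more false
    positives than the reference model on the same transactions."""
    custom = alert_stats(custom_flagged, confirmed_suspicious)
    reference = alert_stats(reference_flagged, confirmed_suspicious)
    return (
        custom["missed"] <= reference["missed"]
        and custom["false_positives"] <= reference["false_positives"]
    )


# Made-up transaction IDs.
confirmed = {"t3", "t7"}
custom_flagged = {"t1", "t3", "t7"}
reference_flagged = {"t1", "t2", "t3", "t5"}
print(alert_stats(custom_flagged, confirmed))
print(meets_benchmark(custom_flagged, reference_flagged, confirmed))  # True
```

In this example the custom model misses nothing and raises one false alert, while the reference model misses one suspect and raises three false alerts.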

Beating the Reference Model

The goal for every bank should be to beat the reference model. This is important to ensure that the bank’s custom model will be approved by regulators. But it is also important for reducing risk and cutting operational expense (for example, the cost of reviewing excessive false positives in AML monitoring).

Consistently and demonstrably reducing risk will pay tremendous dividends in the long term. Over the past several years, regulators have increasingly imposed a risk-related cost for banks. This is the setting aside of capital to insure against risk-related losses.

Capital requirements have been imposed for decades to offset credit risk. The higher the risk of an asset portfolio, the greater the capital requirement. This has also been applied to market risk for some time (e.g. Basel II included consideration of derivatives and other risky market instruments). In principle, operational risk was also included, though with inadequate definition. More recently, following the 2008 financial crisis, capital requirements have been further tightened, particularly in Basel III.

It is reasonable to expect that international and national regulators will be looking for demonstration of operational risk management. They are likely to impose more restrictive capital requirements on those banks that cannot demonstrate the predictability and reliability of their risk models.

So the goal for banks should be the use of emerging technologies and processes that will not just improve models, but will provide proof of such improvement. If this results in lower capital requirements (for example, reduced reserves against AML risk), then it will pay for itself many times over.

Conclusion - Bring Them On!

A series of reference models would be very valuable for regulators, banks, model validators, and even software developers. Most model developers are trying to build models that are better than anybody else’s. But there is a strong argument for building models that can define a baseline of reasonable expectations from a regulatory perspective. This is a great example of RegTech at its best.

I am aware of at least one, as yet unannounced, set of partnership discussions in the AML monitoring space. The interesting part is that, although what is being discussed is a reference model, the solution is also based upon machine learning. This allows any bank using the model to start at the reference baseline and improve over time.

Here's the challenge to RegTech model builders. Build a model that will serve as an industry reference model. And build it in such a way that a bank's experience or knowledge will make it even better. You’ll make everybody happy (including your investors).

Posts by Tag

- big data (41)

- advanced analytics (38)

- business perspective solutions (30)

- predictive analytics (25)

- business insights (24)

- data analytics infrastructure (17)

- analytics (16)

- banking (15)

- fintech (15)

- regulatory compliance (15)

- risk management (15)

- regtech (13)

- machine learning (12)

- quantitative analytics (12)

- BI (11)

- big data visualization presentation (11)

- community banking (11)

- AML (10)

- social media (10)

- AML/BSA (9)

- Big Data Prescriptions (9)

- analytics as a service (9)

- banking regulation (9)

- data scientist (9)

- social media marketing (9)

- Comminity Banks (8)

- financial risk (8)

- innovation (8)

- marketing (8)

- regulation (8)

- Digital ID-Proofing (7)

- data analytics (7)

- money laundering (7)

- AI (6)

- AI led digital banking (6)

- AML/BSA/CTF (6)

- Big Data practicioner (6)

- CIO (6)

- Performance Management (6)

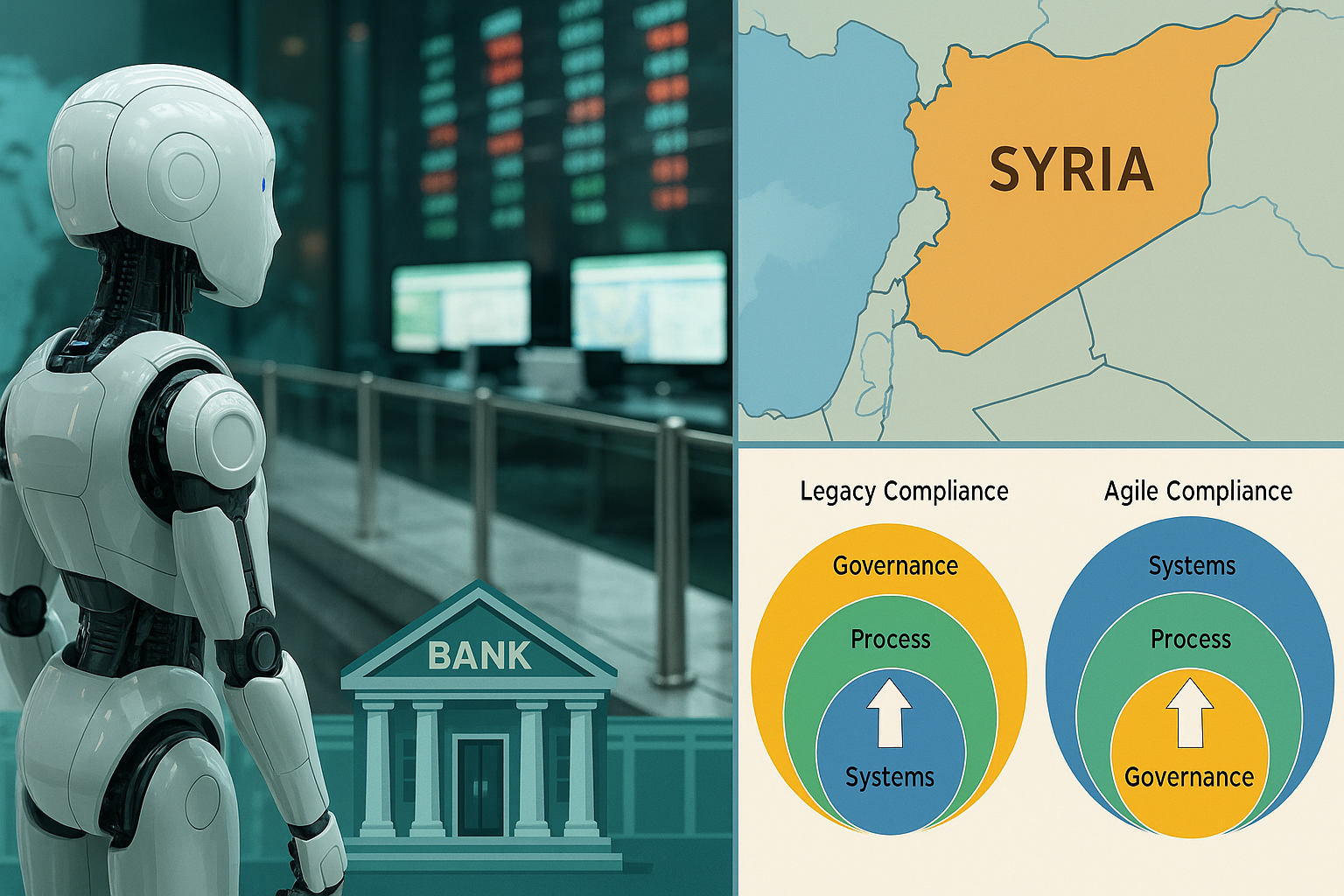

- agile compliance (6)

- banking performance (6)

- digital banking (6)

- visualization (6)

- AML/BSA/CFT (5)

- KYC (5)

- data-as-a-service (5)

- email marketing (5)

- industrial big data (5)

- risk manangement (5)

- self-sovereign identity (5)

- verifiable credential (5)

- Hadoop (4)

- KPI (4)

- MoSoLoCo (4)

- NoSQL (4)

- buying cycle (4)

- identity (4)

- instrumentation (4)

- manatoko (4)

- mathematical models (4)

- sales (4)

- 2015 (3)

- bitcoin (3)

- blockchain (3)

- core banking (3)

- customer analyitcs (3)

- direct marketing (3)

- model validation (3)

- risk managemen (3)

- wearable computing (3)

- zero-knowledge proof (3)

- zkp (3)

- Agile (2)

- Cloud Banking (2)

- FFIEC (2)

- Internet of Things (2)

- IoT (2)

- PPP (2)

- PreReview (2)

- SaaS (2)

- Sales 2.0 (2)

- The Cloud is the Bank (2)

- Wal-Mart (2)

- data sprawl (2)

- digital marketing (2)

- disruptive technologies (2)

- email conversions (2)

- mobile marketing (2)

- new data types (2)

- privacy (2)

- risk (2)

- virtual currency (2)

- 2014 (1)

- 2025 (1)

- 3D printing (1)

- AMLA2020 (1)

- BOI (1)

- DAAS (1)

- Do you Hadoop (1)

- FinCEN_BOI (1)

- Goldman Sachs (1)

- HealthKit (1)

- Joseph Schumpeter (1)

- Manatoko_boir (1)

- NationalPriorites (1)

- PaaS (1)

- Sand Hill IoT 50 (1)

- Spark (1)

- agentic ai (1)

- apple healthcare (1)

- beneficial_owener (1)

- bsa (1)

- cancer immunotherapy (1)

- ccpa (1)

- currency (1)

- erc (1)

- fincen (1)

- fraud (1)

- health app (1)

- healthcare analytics (1)

- modelling (1)

- occam's razor (1)

- outlook (1)

- paycheck protection (1)

- personal computer (1)

- sandbox (1)

Recent Posts

Popular Posts

Here is a funny AI story.

Every community bank CEO now faces unprecedented...

On May 13, 2025, the U.S. government announced...