Occam's Razor and Managing Model Risk

Introduction

Our growing dependence on mathematical models to calculate risk has created an incentive to make model risk more transparent. Models that measure financial risk (e.g., market risk in the trading book, or credit risk in the wholesale and consumer lending books) and operational risk (e.g., AML risk) can be misleading. They can be misapplied or fed the wrong inputs, and their results are too often misinterpreted.

Firms need to guard against models that are either too complex or too simple. The goal in any application is to design models that fit the data well and give the best out-of-sample predictions. How do you know if a model is too complex? A practical and tangible way to compare the complexity of models is to list and examine transparent factors such as the number of assumptions, the compute time and the number of parameters to be estimated. But, to quote others, ‘I know model complexity when I see it; everything should be as simple as possible, but not simpler!’ Think also about how long it will take you to explain the model to senior management.
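One way to make the "number of parameters" factor concrete is to penalize it explicitly. The sketch below is a minimal illustration, not the author's method: it fits polynomials of increasing degree to hypothetical synthetic data (a straight line plus noise), then compares in-sample fit, error on held-out points, and two standard information criteria, AIC and BIC, which trade goodness of fit against parameter count. The data, the even/odd train/holdout split and all function names are assumptions made for illustration.

```python
import math
import random


def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (adequate for low degrees)."""
    m = degree + 1
    # Augmented normal equations [X^T X | X^T y] for the basis 1, x, ..., x^degree.
    aug = [
        [sum(x ** (i + j) for x in xs) for j in range(m)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(m)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, m):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, m + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    coeffs = [0.0] * m
    for i in reversed(range(m)):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, m))
        coeffs[i] = (aug[i][m] - tail) / aug[i][i]
    return coeffs


def predict(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


def rss(coeffs, xs, ys):
    """Residual sum of squares."""
    return sum((y - predict(coeffs, x)) ** 2 for x, y in zip(xs, ys))


def aic(rss_value, n, k):
    """Gaussian-likelihood AIC, up to an additive constant."""
    return n * math.log(rss_value / n) + 2 * k


def bic(rss_value, n, k):
    """Gaussian-likelihood BIC, up to an additive constant."""
    return n * math.log(rss_value / n) + k * math.log(n)


# Hypothetical synthetic data: y = 1 + 2x plus Gaussian noise on [-1, 1].
rng = random.Random(42)
xs = [-1 + 2 * i / 59 for i in range(60)]
ys = [1.0 + 2.0 * x + rng.gauss(0, 0.3) for x in xs]
train_x, train_y = xs[::2], ys[::2]  # even-indexed points used for fitting
test_x, test_y = xs[1::2], ys[1::2]  # odd-indexed points held out

results = []
for degree in range(4):
    coeffs = fit_polynomial(train_x, train_y, degree)
    k = degree + 1  # number of estimated coefficients
    in_rss = rss(coeffs, train_x, train_y)
    results.append({
        "degree": degree,
        "train_rss": in_rss,
        "holdout_mse": rss(coeffs, test_x, test_y) / len(test_x),
        "aic": aic(in_rss, len(train_x), k),
        "bic": bic(in_rss, len(train_x), k),
    })

for row in results:
    print(row)
print("best by holdout:", min(results, key=lambda r: r["holdout_mse"])["degree"])
print("best by BIC:", min(results, key=lambda r: r["bic"])["degree"])
```

For nested least-squares fits, training error never rises as parameters are added, so in-sample fit alone cannot flag a model that is too complex; the held-out error and the criterion penalties are what push back. The count `k` here excludes the noise variance, which shifts every model's score by the same constant and so does not change the ranking.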

External Peer Review

An important way to control model risk is to establish a formal external peer review process for vetting mathematical models. An external peer review provides independent assurance that the mathematical model is reasonable. The external peer review should include, but not be limited to, examining the following questions:

1) What is the theory behind the model? For example:

- What assumptions are being made for the model to apply?

- Are there equally compelling theories?

- Which theory is the most parsimonious, or the most empirically testable?

- Why was this theory chosen?

- Is the model specification unique or is it a representative of an equivalence class?

2) What parameters need to be calibrated? For example:

- Given sufficient data, is the calibration unique or are there multiple possible calibrations?

- How sensitive are model extrapolations to parameter choice?

- How are the parameters estimated?

- What statistical inference, optimization, or utility is being used and why?

3) Does the chosen model answer the question?

- A model may be parsimonious, robust, elegant and predictive, yet still be applied to a problem for which its assumptions are materially violated.

- A simple model applied judiciously, with caveats, may work better than a more sophisticated model applied blindly. In any event, the user needs judgment and prudence to apply a model appropriately.

- All models are wrong at some level of abstraction since they are imperfect representations of reality and do not capture all the relevant frictions.

4) Does the software actually implement the chosen model?

- How do you know?

- Does it correctly calculate known cases?

- What numerical approximations are implicit in the code?

- When does the algorithm used to implement a model break down, take too long, or hit a phase transition?

5) How can the theory, model, code and calibration be improved over time?

- What diagnostics are needed to monitor model performance?

- When things don't work, is it obvious? Is it clear why they are not working? If not, document the model more clearly.

- Compare the model to a benchmark model. If the model being vetted does not agree with the benchmark, learn why not, and determine when and how to trust its results on the basis of theory, model assumptions, calibration, calculation algorithms and coding.
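Item 2 above asks how sensitive model extrapolations are to parameter choice. A minimal way to probe this is to bump each parameter by a small relative amount and measure the elasticity of the output. The sketch below assumes a hypothetical exponential-growth model `extrapolate` with parameters `y0` and `g`; any model that maps a parameter dictionary to a number could be substituted.

```python
import math


def extrapolate(params, horizon):
    """Hypothetical model: exponential growth y(t) = y0 * exp(g * t)."""
    return params["y0"] * math.exp(params["g"] * horizon)


def elasticities(model, params, horizon, rel_bump=0.01):
    """Central-difference estimate of d ln(output) / d ln(parameter) for each parameter."""
    base = model(params, horizon)
    result = {}
    for name, value in params.items():
        up = {**params, name: value * (1 + rel_bump)}
        down = {**params, name: value * (1 - rel_bump)}
        result[name] = (model(up, horizon) - model(down, horizon)) / (2 * rel_bump * base)
    return result


params = {"y0": 100.0, "g": 0.05}  # hypothetical calibrated values
for horizon in (1, 10, 30):
    print(horizon, elasticities(extrapolate, params, horizon))
```

For this model the elasticity with respect to `g` equals `g * horizon`, so the same parameter uncertainty matters far more for long extrapolations than for short ones. A relative bump cannot be applied to a parameter whose value is zero; an absolute bump would be needed there.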
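Item 4 asks whether the software correctly calculates known cases. A common pattern, sketched here under the assumption that the implementation under review is a Cox-Ross-Rubinstein binomial tree for a European call, is to compare it against a case with a closed-form answer (the Black-Scholes formula) and watch how the error behaves as the number of steps grows. The explicit check on the risk-neutral probability `p` illustrates one way such an algorithm breaks down: with too few steps relative to the interest rate and volatility, `p` falls outside (0, 1) and the tree no longer makes sense.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(s, k, r, sigma, t):
    """Closed-form European call price: the known case used as the reference."""
    d1 = (math.log(s / k) + (r + 0.5 * sigma ** 2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s * norm_cdf(d1) - k * math.exp(-r * t) * norm_cdf(d2)


def crr_call(s, k, r, sigma, t, steps):
    """European call on a Cox-Ross-Rubinstein binomial tree (the implementation under review)."""
    dt = t / steps
    u = math.exp(sigma * math.sqrt(dt))
    d = 1.0 / u
    p = (math.exp(r * dt) - d) / (u - d)
    if not 0.0 < p < 1.0:
        # The tree admits arbitrage for this step size; the method breaks down.
        raise ValueError(f"risk-neutral probability {p:.4f} outside (0, 1); use more steps")
    disc = math.exp(-r * dt)
    # Terminal payoffs, indexed by the number of up moves j.
    values = [max(s * u ** j * d ** (steps - j) - k, 0.0) for j in range(steps + 1)]
    # Roll back one step at a time: node j has up child j + 1 and down child j.
    for n in range(steps, 0, -1):
        values = [disc * (p * values[j + 1] + (1.0 - p) * values[j]) for j in range(n)]
    return values[0]


reference = black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0)
for steps in (10, 50, 200, 1000):
    error = crr_call(100.0, 100.0, 0.05, 0.2, 1.0, steps) - reference
    print(f"steps={steps:5d}  error vs closed form={error:+.5f}")
```

The same harness also answers "does it take too long?": the rollback costs on the order of `steps ** 2` operations, so the step count needed for a given accuracy translates directly into compute time.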
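Item 5 asks what diagnostics are needed to monitor model performance. For a market-risk VaR model, one widely used diagnostic (swapped in here as an illustration, not prescribed by the text) is to count the days on which losses exceeded the VaR estimate and apply Kupiec's proportion-of-failures (POF) likelihood-ratio test. The sketch assumes VaR is reported as a positive loss amount and compares the statistic with the approximate 95% critical value of a chi-square distribution with one degree of freedom; the backtest inputs are hypothetical.

```python
import math

CHI2_1DF_95 = 3.841  # approximate 95% critical value, chi-square with 1 degree of freedom


def count_exceptions(pnl, var):
    """Number of days on which the loss (-P&L) exceeded that day's VaR (a positive number)."""
    return sum(1 for profit, v in zip(pnl, var) if -profit > v)


def kupiec_pof(exceptions, observations, p):
    """Kupiec proportion-of-failures likelihood ratio for an expected exception rate p."""
    x, n = exceptions, observations

    def log_likelihood(q):
        # log[(1 - q)^(n - x) * q^x], treating 0 * log(0) as 0.
        ll = 0.0
        if n - x > 0:
            ll += (n - x) * math.log(1.0 - q)
        if x > 0:
            ll += x * math.log(q)
        return ll

    return -2.0 * (log_likelihood(p) - log_likelihood(x / n))


# Hypothetical backtest: 250 trading days of 99% VaR (expected exception rate 1%).
exceptions = 6
lr = kupiec_pof(exceptions, 250, 0.01)
print(f"LR = {lr:.2f}; reject at the 95% level: {lr > CHI2_1DF_95}")
```

A rejection says only that the exception rate is inconsistent with the stated confidence level; working out why is still a job for the model documentation and the people who vet it.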

The external peer review process should be harmonized with an internal vetting process.

Internal Vetting Process

The internal vetting process should include, but not be limited to, the following:

1. Model documentation. A vetting book should be constructed that fully documents the model, including both the assumptions underlying the model and its mathematical expression. This documentation should be independent of any particular implementation (e.g., the type of computer code) and should include a term sheet (or a complete description of the transaction).

A mathematical statement of the model should explicitly state all of its components (e.g., variables and their processes, parameters and equations). It should also describe the calibration procedure for the model parameters. Implementation features such as inputs and outputs should be documented, along with a working version of the implementation.

2. Soundness of the model. The internal model vetting process needs to verify that the mathematical model produces results that are a useful representation of reality. At this stage, the risk manager should concentrate on the financial aspects and not become overly focused on the mathematics. Risk management model builders need to appreciate the real-world financial aspects of their models and articulate their value within the organizations they serve. They also need to communicate the limitations and intended uses of models to senior managers, who may not know all the technical details. Models can be used more safely in an organization when their limitations are fully understood.

3. Independent access to data. The internal model vetter should check that the middle office has access to an independent database, so that it can estimate model parameters independently.

4. Benchmark modeling. The internal model vetter should develop a benchmark model based on the assumptions being made and on the specifications of the deal. The results of the benchmark model can then be compared with those of the proposed model.

5. Formal treatment of model risk. A formal model vetting treatment should be built into the overall risk management procedures, and it should call for models to be reevaluated periodically. It is essential to monitor and control model performance over time.

6. Stress-test the model. The internal model vetter should stress-test the model. For example, the model can be stress-tested by examining limit scenarios in order to identify the range of parameter values for which it provides accurate pricing.
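Item 3 calls for independent parameter estimation. As a minimal sketch, assume the parameter in question is an annualized volatility: the middle office re-estimates it from prices drawn from its own database and checks the front-office figure against that estimate. The 252-periods-per-year scaling, the 5% tolerance, the sample prices and the function names are all illustrative assumptions.

```python
import math
import statistics


def annualized_volatility(prices, periods_per_year=252):
    """Sample standard deviation of log returns, scaled by sqrt(periods per year)."""
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return statistics.stdev(returns) * math.sqrt(periods_per_year)


def independent_check(independent, reported, rel_tol=0.05):
    """True if the reported parameter is within rel_tol of the independent estimate."""
    return math.isclose(independent, reported, rel_tol=rel_tol)


# Hypothetical inputs: prices from an independently sourced database,
# and the volatility parameter reported by the front office.
independent_prices = [100.0, 101.2, 99.8, 100.5, 102.1, 101.4, 103.0]
front_office_vol = 0.18
estimate = annualized_volatility(independent_prices)
print(f"independent estimate {estimate:.3f}, reported {front_office_vol:.3f}, "
      f"within tolerance: {independent_check(estimate, front_office_vol)}")
```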
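Item 4 suggests building a benchmark model and comparing results. The harness below is a sketch of that comparison; the two discount-factor functions are hypothetical stand-ins, chosen because they differ in a single assumption (annual versus continuous compounding at the same quoted rate), so the discrepancies they produce have a known explanation. The 0.5% relative tolerance is also an assumption.

```python
import math


def proposed_discount(rate, maturity):
    """Hypothetical 'proposed' model: annual compounding."""
    return 1.0 / (1.0 + rate) ** maturity


def benchmark_discount(rate, maturity):
    """Hypothetical benchmark: continuous compounding at the same quoted rate."""
    return math.exp(-rate * maturity)


def compare_to_benchmark(proposed, benchmark, cases, rel_tol):
    """Return (case, proposed value, benchmark value) wherever they disagree beyond rel_tol."""
    failures = []
    for case in cases:
        a, b = proposed(*case), benchmark(*case)
        if not math.isclose(a, b, rel_tol=rel_tol):
            failures.append((case, a, b))
    return failures


cases = [(0.05, t) for t in (0.25, 1, 2, 10, 30)]
for case, a, b in compare_to_benchmark(proposed_discount, benchmark_discount, cases, rel_tol=0.005):
    print(f"rate={case[0]}, maturity={case[1]}: proposed={a:.6f}, benchmark={b:.6f}")
```

In this toy case the disagreement grows with maturity, and its cause is a compounding convention rather than a coding error. Recording that kind of explanation is what lets the vetter decide when the proposed model's results can be trusted.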
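Item 5 calls for models to be reevaluated periodically. A simple control, sketched here with a hypothetical model inventory and hypothetical review intervals by risk tier (illustrative values, not regulatory requirements), is to flag every model whose next review date has already passed.

```python
from datetime import date, timedelta

# Hypothetical review intervals by model risk tier (illustrative only).
REVIEW_INTERVAL_DAYS = {"high": 365, "medium": 730, "low": 1095}


def overdue_models(inventory, today):
    """Return (name, due date) for each model whose periodic review is overdue."""
    overdue = []
    for model in inventory:
        due = model["last_validated"] + timedelta(days=REVIEW_INTERVAL_DAYS[model["tier"]])
        if due < today:
            overdue.append((model["name"], due))
    return overdue


# Hypothetical model inventory.
inventory = [
    {"name": "trading-book VaR", "tier": "high", "last_validated": date(2023, 1, 15)},
    {"name": "retail PD scorecard", "tier": "medium", "last_validated": date(2023, 6, 1)},
    {"name": "AML alert scoring", "tier": "high", "last_validated": date(2024, 3, 1)},
]
print(overdue_models(inventory, today=date(2024, 6, 30)))
```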
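Item 6 describes stress-testing against limit scenarios to find the parameter range over which a model prices accurately. As an illustration, take as the "model under test" the at-the-money rule of thumb C ≈ 0.4 · S · σ · √T (often attributed to Brenner and Subrahmanyam), which holds in the limit of small σ√T with zero interest rates, and sweep volatility against the exact Black-Scholes value. The 1% tolerance, the spot of 100 and the one-year maturity are assumptions.

```python
import math


def exact_atm_call(s, sigma, t):
    """Black-Scholes at-the-money call with zero rates: S * (2 N(sigma sqrt(t) / 2) - 1)."""
    x = sigma * math.sqrt(t)
    # 2 N(y) - 1 == erf(y / sqrt(2)), with y = x / 2.
    return s * math.erf(x / (2.0 * math.sqrt(2.0)))


def approx_atm_call(s, sigma, t):
    """Model under test: the rule-of-thumb approximation C ~ 0.4 * S * sigma * sqrt(t)."""
    return 0.4 * s * sigma * math.sqrt(t)


def accurate_range(sigmas, s=100.0, t=1.0, rel_tol=0.01):
    """Volatilities at which the approximation is within rel_tol of the exact price."""
    ok = []
    for sigma in sigmas:
        exact = exact_atm_call(s, sigma, t)
        if abs(approx_atm_call(s, sigma, t) - exact) / exact <= rel_tol:
            ok.append(sigma)
    return ok


sigmas = [round(0.05 * i, 2) for i in range(1, 21)]  # 5% to 100% volatility
ok = accurate_range(sigmas)
print(f"approximation within 1% for sigma in [{min(ok)}, {max(ok)}]")
```

The sweep starts near the limit where the approximation was derived and pushes away from it; the point where the tolerance is breached marks the edge of the range in which the model should be trusted.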

The evolution towards sophisticated financial mathematics is an inevitable feature of modern financial risk management, and model risk is inherent in the use of models. Firms must avoid placing blind faith in the results offered by models, and must hunt down all possible sources of inaccuracy in a model. In particular, they must learn to think through situations in which the failure of a mathematical model might have a significant impact. The board and senior managers need to be educated about the dangers of failing to vet mathematical models properly. They also need to insist that model risk is made transparent, and that all models are independently vetted and governed under a clearly articulated peer review process.

Posts by Tag

- big data (41)

- advanced analytics (38)

- business perspective solutions (30)

- predictive analytics (25)

- business insights (24)

- data analytics infrastructure (17)

- analytics (16)

- banking (15)

- fintech (15)

- regulatory compliance (15)

- risk management (15)

- regtech (13)

- machine learning (12)

- quantitative analytics (12)

- BI (11)

- big data visualization presentation (11)

- community banking (11)

- AML (10)

- social media (10)

- AML/BSA (9)

- Big Data Prescriptions (9)

- analytics as a service (9)

- banking regulation (9)

- data scientist (9)

- social media marketing (9)

- Comminity Banks (8)

- financial risk (8)

- innovation (8)

- marketing (8)

- regulation (8)

- Digital ID-Proofing (7)

- data analytics (7)

- money laundering (7)

- AI (6)

- AI led digital banking (6)

- AML/BSA/CTF (6)

- Big Data practicioner (6)

- CIO (6)

- Performance Management (6)

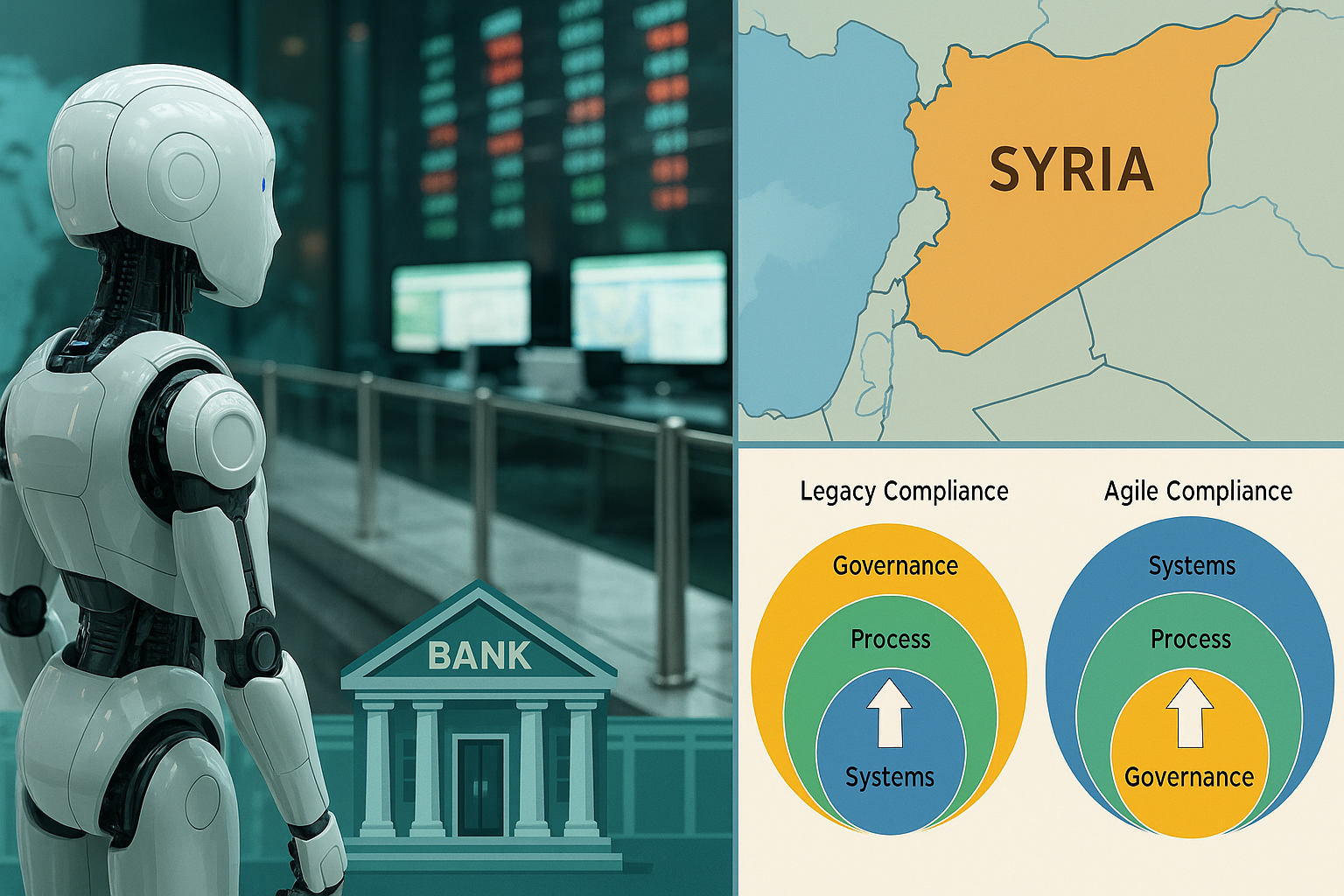

- agile compliance (6)

- banking performance (6)

- digital banking (6)

- visualization (6)

- AML/BSA/CFT (5)

- KYC (5)

- data-as-a-service (5)

- email marketing (5)

- industrial big data (5)

- risk manangement (5)

- self-sovereign identity (5)

- verifiable credential (5)

- Hadoop (4)

- KPI (4)

- MoSoLoCo (4)

- NoSQL (4)

- buying cycle (4)

- identity (4)

- instrumentation (4)

- manatoko (4)

- mathematical models (4)

- sales (4)

- 2015 (3)

- bitcoin (3)

- blockchain (3)

- core banking (3)

- customer analyitcs (3)

- direct marketing (3)

- model validation (3)

- risk managemen (3)

- wearable computing (3)

- zero-knowledge proof (3)

- zkp (3)

- Agile (2)

- Cloud Banking (2)

- FFIEC (2)

- Internet of Things (2)

- IoT (2)

- PPP (2)

- PreReview (2)

- SaaS (2)

- Sales 2.0 (2)

- The Cloud is the Bank (2)

- Wal-Mart (2)

- data sprawl (2)

- digital marketing (2)

- disruptive technologies (2)

- email conversions (2)

- mobile marketing (2)

- new data types (2)

- privacy (2)

- risk (2)

- virtual currency (2)

- 2014 (1)

- 2025 (1)

- 3D printing (1)

- AMLA2020 (1)

- BOI (1)

- DAAS (1)

- Do you Hadoop (1)

- FinCEN_BOI (1)

- Goldman Sachs (1)

- HealthKit (1)

- Joseph Schumpeter (1)

- Manatoko_boir (1)

- NationalPriorites (1)

- PaaS (1)

- Sand Hill IoT 50 (1)

- Spark (1)

- agentic ai (1)

- apple healthcare (1)

- beneficial_owener (1)

- bsa (1)

- cancer immunotherapy (1)

- ccpa (1)

- currency (1)

- erc (1)

- fincen (1)

- fraud (1)

- health app (1)

- healthcare analytics (1)

- modelling (1)

- occam's razor (1)

- outlook (1)

- paycheck protection (1)

- personal computer (1)

- sandbox (1)

Recent Posts

Popular Posts

Here is a funny AI story.

Every community bank CEO now faces unprecedented...

On May 13, 2025, the U.S. government announced...